FROGS Core

Galaxy

- Get Started ▾

- Reads processing

- Remove chimera

- Cluster/ASV filters

- Taxonomic affiliation

- Phylogenetic tree building

- ITSx

- Read demultiplexing

- Affiliatio filters

- Affiliation postprocessing

- Abundance normalisation

- Convert Biom file to TSV file

- Convert TSV file to Biom file

- Cluster/ASV report

- Affiliation report

Main tools

Optional tools

CLI

- Get Started

- Reads processing

- Remove chimera

- Cluster/ASV filters

- Taxonomic affiliation

- Phylogenetic tree building

- ITSx

- Read demultiplexing

- Affiliation filters

- Affiliation postprocessing

- Abundance normalisation

- Convert Biom file to TSV file

- Convert TSV file to Biom file

- Cluster/ASV report

- Affiliation report

Main tools

Optional tools

Reads processing

Context

Denoising refers to the process of correcting sequencing errors that occur during high-throughput sequencing of amplicons.

These errors can originate from PCR amplification or from the sequencing platform itself (e.g., Illumina, long-read technologies,

or older 454 systems). They typically appear as spurious singletons or low-abundance variants that differ by only one or a few

bases from the actual biological sequences. Tools such as denoising.py in FROGS implement strategies such as clustering (e.g.,

with Swarm) or statistical inference (e.g., with DADA2) to distinguish real variants (ASVs) from sequencing noise.

How it does

This tool cleans raw sequencing data before denoising.

To achieve this, it relies on well-established preprocessing tools:

Then the tool can clustered sequences using either:

- Swarm, which groups sequences based on local connectivity and a user-defined distance (e.g., 1 nucleotide difference), producing robust clusters without relying on arbitrary global thresholds.

- DADA2, which applies a statistical error model to distinguish true biological sequences from sequencing errors, allowing inference of exact sequence variants with very high resolution.

Together, these steps ensure that downstream analyses are based on accurate, high-quality ASVs, reducing the impact of technical artifacts such as primer sequences, incomplete overlaps, or sequencing errors. It is possible to perform cleanup only with the

To achieve this, it relies on well-established preprocessing tools:

- Cutadapt is used to detect and remove sequencing primers or adapters from the reads.

- Delete sequences without good primers. In the context of amplicon data, primers must be trimmed because they can interfere with downstream clustering/denoising and taxonomic assignment.

Cutadapt also allows mismatches, making it robust for real-world data where primer binding is not always perfect.

- Merging of R1 and R2 reads with vsearch, flash or pear (only in command line) into single continuous sequences when their overlaps are sufficient.

- Delete sequence with not expected lengths

- Delete sequences with ambiguous bases (N)

- Dereplication

- + removing homopolymers (size = 8 ) for 454 data

- + quality filter for 454 data

Then the tool can clustered sequences using either:

- Swarm, which groups sequences based on local connectivity and a user-defined distance (e.g., 1 nucleotide difference), producing robust clusters without relying on arbitrary global thresholds.

- DADA2, which applies a statistical error model to distinguish true biological sequences from sequencing errors, allowing inference of exact sequence variants with very high resolution.

Together, these steps ensure that downstream analyses are based on accurate, high-quality ASVs, reducing the impact of technical artifacts such as primer sequences, incomplete overlaps, or sequencing errors. It is possible to perform cleanup only with the

--process preprocess-only option of denoising. N.B. : Long reads

DADA2 needs to see the same sequences multiple times to model sequencing errors. If almost all sequences are unique (as is often the case with very long PacBio reads), DADA2 cannot function properly because it does not have enough information to distinguish true errors from true biological variations.

If the duplication rate is less than 10% (i.e., if the number of unique sequences > 0.9 × the total number of reads), then DADA2 is not appropriate.

The knowledge of your data is essential. You have to answer the following questions to choose the parameters:

If the duplication rate is less than 10% (i.e., if the number of unique sequences > 0.9 × the total number of reads), then DADA2 is not appropriate.

The knowledge of your data is essential. You have to answer the following questions to choose the parameters:

- Sequencing technology?

- Targeted region and the expected amplicon length?

- Have reads already been merged?

- Have primers already been deleted?

- What are the primers sequences?

Configuration: Short reads (16S V3V4 use cases)

With Swarm :

mkdir FROGS

sbatch -J denoising -o LOGS/denoising.out -e LOGS/denoising.err -c 8 --export=ALL --wrap="module load devel/Miniforge/Miniforge3 && module load bioinfo/PEAR/0.9.10 && module load bioinfo/FROGS/FROGS-v5.0.2 && denoising.py illumina --input-archive data.tar.gz --min-amplicon-size 420 --max-amplicon-size 470 --merge-software vsearch --five-prim-primer ACGGRAGGCWGCAGT --three-prim-primer AGGATTAGATACCCTGGTA --R1-size 250 --R2-size 250 --nb-cpus 8 --html FROGS/denoising_swarm.html --log-file FROGS/denoising_swarm.log --output-biom FROGS/denoising_swarm.biom --output-fasta FROGS/denoising_swarm.fasta --process swarm && module unload bioinfo/FROGS/FROGS-v5.0.2"

sbatch -J denoising -o LOGS/denoising.out -e LOGS/denoising.err -c 8 --export=ALL --wrap="module load devel/Miniforge/Miniforge3 && module load bioinfo/PEAR/0.9.10 && module load bioinfo/FROGS/FROGS-v5.0.2 && denoising.py illumina --input-archive data.tar.gz --min-amplicon-size 420 --max-amplicon-size 470 --merge-software vsearch --five-prim-primer ACGGRAGGCWGCAGT --three-prim-primer AGGATTAGATACCCTGGTA --R1-size 250 --R2-size 250 --nb-cpus 8 --html FROGS/denoising_dada2.html --log-file FROGS/denoising_dada2.log --output-biom FROGS/denoising_dada2.biom --output-fasta FROGS/denoising_dada2.fasta --process dada2 && module unload bioinfo/FROGS/FROGS-v5.0.2"

denoising.py --help)

Interpretation: Short reads (16S V3V4 use cases)

Swarm Results

Let look at the HTML file to see the result of denoising.You have three panels: Denoising, Cluster distribution, and Sample distribution. Here, we will focus primarily on the Denoising panel.

Since the Cluster distribution and Sample distribution panels are common to multiple tools, a more detailed interpretation with vizualisation of these panels can be found in the Cluster Stat section .

This bar plot shows the number of sequences that do not pass the filters. At the end of the filtering process, we have 1,156,905 sequences.

Below you will find a table showing details on merged sequences. Display all sequences (1) and select all samples (2). You can then click on Display amplicon lengths (3) and Display preprocessed amplicon lengths (4).

We observe that the majority of sequences are ~425 nt long.

The other tabs give information about clusters. They show classical characteristics of clusters built with swarm:

- A lot of clusters: 142,515

- ~81.63% of them are composed of only 1 sequence

DADA2 Results

Let look at the HTML file to check what happened.- 87.39% of raw reads are kept (1,200,783 sequences from 1,374,011)

- 8,580 Clusters, ~23.24% of them composed of only 1 sequences

Configuration: Short reads (ITS use cases)

You can upload ITS data in here or with

wget .

wget https://web-genobioinfo.toulouse.inrae.fr/~formation/15_FROGS/current/ITS_fast.tar.gz

wget https://web-genobioinfo.toulouse.inrae.fr/~formation/15_FROGS/current/ITS_fast_replicates.tsv

sbatch -J denoising -o LOGS/denoising.out -e LOGS/denoising.err -c 8 --export=ALL --wrap="module load devel/Miniforge/Miniforge3 && module load bioinfo/PEAR/0.9.10 && module load bioinfo/FROGS/FROGS-v5.0.2 && denoising.py illumina --input-archive ITS_fast.tar.gz --R1-size 250 --R2-size 250 --mismatch-rate 0.1 --merge-software vsearch --keep-unmerged --min-amplicon-size 180 --max-amplicon-size 490 --five-prim-primer 'CTTGGTCATTTAGAGGAAGTAA' --three-prim-primer 'GCATCGATGAAGAACGCAGC' --process swarm --distance 1 --fastidious --nb-cpus 8 --html FROGS/denoising_its.html --log-file FROGS/denoising_its.log --output-biom FROGS/denoising_its.biom --output-fasta FROGS/denoising_its.fasta && module unload bioinfo/FROGS/FROGS-v5.0.2"

Interpretation: Short reads (ITS use cases)

Swarm Results

Let look at the HTML file to see the result of denoising.You have three panels: Denoising, Cluster distribution, and Sample distribution. Here, we will focus primarily on the Denoising panel.

Since the Cluster distribution and Sample distribution panels are common to multiple tools, a more detailed interpretation with vizualisation of these panels can be found in the Cluster Stat section .

This bar plot shows the number of sequences that do not pass the filters. At the end of the filtering process, we have 198,191 sequences. We also see the number of artificially recombined reads, 47,594 reads.

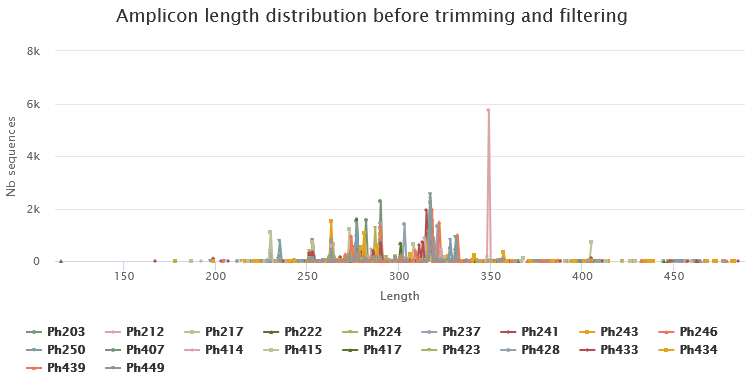

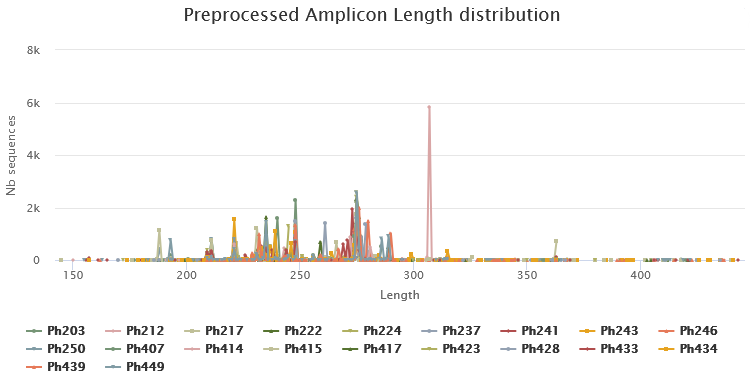

Below you will find a table showing details on merged sequences. Display all sequences (1) and select all samples (2). You can then click on Display amplicon lengths (3) and Display preprocessed amplicon lengths (4).

ITS sequence sizes vary greatly.

Details on artificial combined sequences

This table provides information on artificial combined sequences by sample.

The other tabs give information about clusters. They show classical characteristics of clusters built with swarm:

- A lot of clusters: 50,883

- 97.9% of them are composed of only 1 sequence